Egocentric Manipulation Interface

Learning Active Vision and Whole-Body Manipulation from Egocentric Human Demonstrations

Anonymous Authors, Under Peer Review

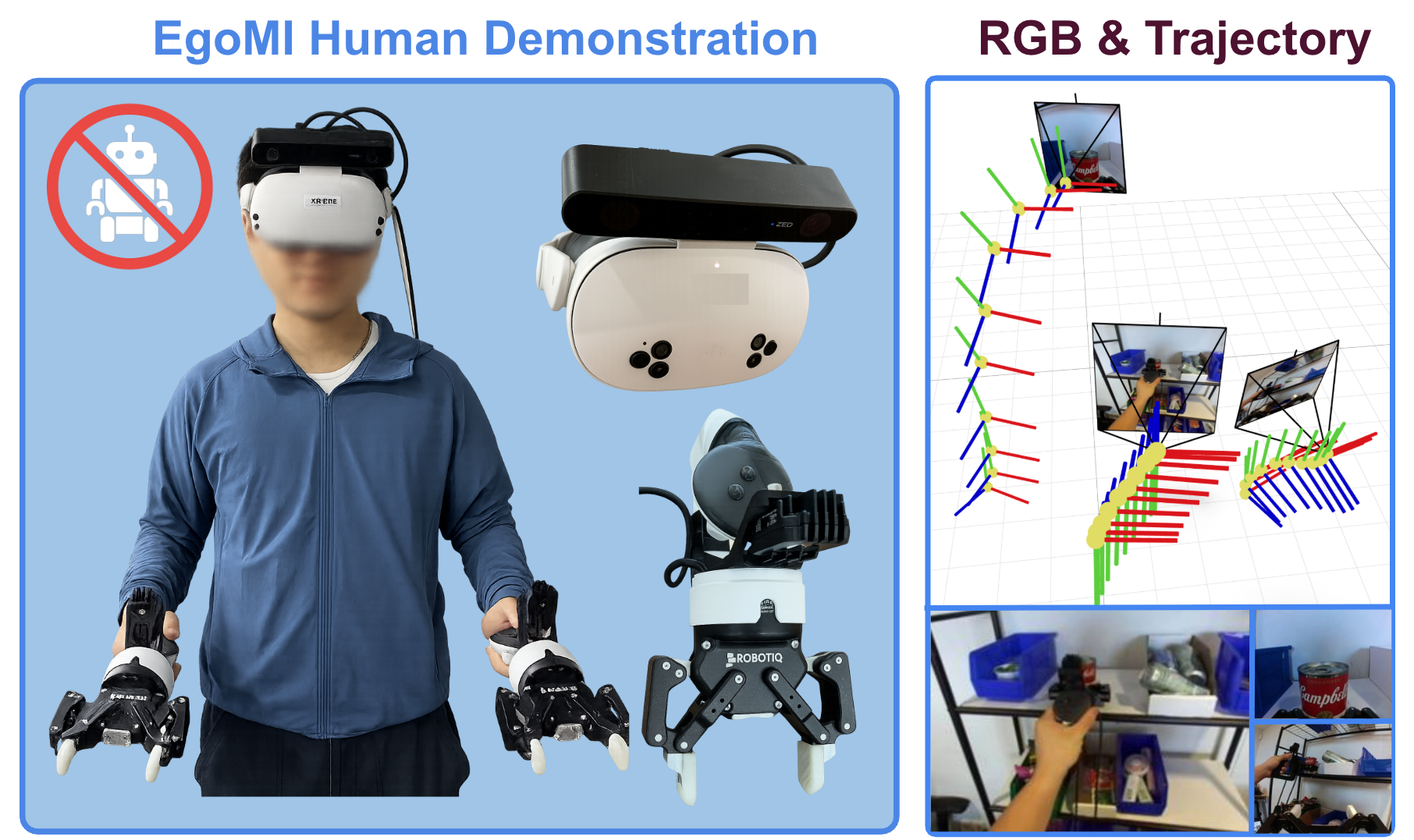

EgoMI is a scalable framework for collecting and deploying egocentric human demonstration data to train and retarget whole-body + active vision manipulation policies - without requiring robot hardware for teleoperation.

Abstract ▾(click to expand)

Real World Policy Rollouts

←

→

Click a thumbnail to change the interactive view above.

EgoMI Device

The EgoMI device records egocentric human demonstrations, capturing synchronized head, hand, and visual data to bridge the human-robot embodiment gap. It combines a Meta Quest 3S headset for head and hand tracking with a camera mounted above the headset for egocentric head observation and neck action retargeting. Each hand controller includes a small wrist-mounted camera and a standard mechanical flange mount for a robot gripper. During collection, the controller triggers actuate the drive-by-wire grippers, letting any user perform real bimanual manipulation tasks naturally. The resulting dataset consists of synchronized head and hand motion, and video streams that can be retargeted directly to robots with no teleoperation or on-embodiment data required.

The EgoMI device records egocentric human demonstrations, capturing synchronized head, hand, and visual data to bridge the human-robot embodiment gap. It combines a Meta Quest 3S headset for head and hand tracking with a camera mounted above the headset for egocentric head observation and neck action retargeting. Each hand controller includes a small wrist-mounted camera and a standard mechanical flange mount for a robot gripper. During collection, the controller triggers actuate the drive-by-wire grippers, letting any user perform real bimanual manipulation tasks naturally. The resulting dataset consists of synchronized head and hand motion, and video streams that can be retargeted directly to robots with no teleoperation or on-embodiment data required.

Spatial Aware Robust Keyframe Selection (SPARKS)

SPARKS is a lightweight training-free keyframe selection algorithm that equips imitation-learning policies with spatial memory. Human head motion produces rapid viewpoint shifts, causing critical task information to appear under past perspectives. SPARKS scores and selects keyframes based on viewpoint novelty, temporal recency, and motion smoothness, retaining only the most informative head-camera images. This memory buffer provides the policy with essential spatial context across time—without recurrent or learned models. SPARKS allows robots to recall past viewpoints during manipulation, yielding more stable, context-aware performance under occlusion, motion, and long-horizon tasks.Experimental Randomization Distribution

Randomization distribution and example initial configuration of the tabletop environment highlighting the

distribution of object positions and clutter scenarios used during

evaluation rollouts. (Right) Initial configurations for target object

may be outside of the immediate field of view of the initialized robot

during experimentation. Target object and placement location may

also reside on opposite ends of the workspace requiring a bimanual

handoff maneuver.

Randomization distribution and example initial configuration of the tabletop environment highlighting the

distribution of object positions and clutter scenarios used during

evaluation rollouts. (Right) Initial configurations for target object

may be outside of the immediate field of view of the initialized robot

during experimentation. Target object and placement location may

also reside on opposite ends of the workspace requiring a bimanual

handoff maneuver.